To go directly to my projects, click here. Use the navigation bar on the left to reach any of my portfolio pages!

About me

I'm currently working as a data engineer/data scientist at Frontier Communications down in Tampa, FL. I work mainly on developing and maintaining ETL scripts in an on-prem SQL Server, pulling in data from various sources within the company into one place for analysts and other stakeholders. I'm also assisting in the migration of our on-prem data to a Databricks environment. Additionally, I do some work with churn ML models and ad-hoc analytics requests, but I've enjoyed data engineering and infrastructure quite a bit, so I've trended in that direction.

In May 2023, I received my MS in Computer Engineering from Duke University, where I gained a deep understanding of the underlying principles of computer systems, algorithms, and machine learning. Throughout my academic career, I have focused on developing my skills in data, machine learning, and DevOps/automation. I've worked on numerous projects exploring the intersection of these interests, which I hope to demonstrate through this portfolio.

In addition to my academic pursuits, I've gained practical experience through my internship with the LA Clippers doing data analytics work (summers 2021 & 2022). You can read more about my time with the Clippers here.

As a dedicated and detail-oriented engineer, I'm committed to delivering high-quality solutions to complex problems. I'm passionate about using my skills to advance the field of machine learning and contribute to the development of new and innovative technologies.

In my free time, I enjoy keeping up with the latest developments in data infrastructure and machine learning. I'm also somewhat of a basketball fanatic and find it to be a fascinating space for the application of ML. I'm excited about the opportunities that lie ahead and look forward to making a meaningful impact as a machine learning and data practitioner.

Undergraduate Studies

I started my undergraduate degree at Lasell University as a business major and a part of the men's basketball team. During my second semester, I took an introductory computing class and absolutely fell in love with the field of computer science. I then knew I wanted programming and analytics to be part of my career. I later transferred to Bryant University where I received a Bachelor's degree in data science with minors in business administration and mathematics. Outside of academics, I was part of the men's ultimate frisbee team and helped establish the school's first club basketball team.

Projects Summary

Below, I'll list some of my favorite projects I've had the opportunity to work on. Most projects have an associated demo showing how all the moving pieces work together. I'll also place a link to each repo in case you'd like to take a deeper look!

Image Classifier Web App

This is a web application that allows users to upload an image and receive a classification prediction for that image using a pre-trained deep learning model. The application uses Flask as the backend framework, which invokes an AWS Lambda function to make requests to a pre-deployed Amazon SageMaker endpoint for image classification. The predicted labels are then returned to the user and displayed on the web page.
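The Lambda step described above could look roughly like the sketch below. It is illustrative only and not the project's actual code: the endpoint name `image-classifier`, the `image_b64` event field, and the `application/x-image` content type are all assumptions, and the shape of the JSON response depends on the deployed model.

```python
import base64
import json


def classify_image(image_bytes, runtime_client, endpoint_name="image-classifier"):
    """Send raw image bytes to a SageMaker endpoint and return the parsed response."""
    response = runtime_client.invoke_endpoint(
        EndpointName=endpoint_name,         # hypothetical endpoint name
        ContentType="application/x-image",  # assumed content type for raw image bytes
        Body=image_bytes,
    )
    # The response body is a stream; its JSON layout depends on the deployed model.
    return json.loads(response["Body"].read())


def lambda_handler(event, context, runtime_client=None):
    """Lambda entry point: decode the uploaded image and forward it to the endpoint."""
    if runtime_client is None:
        import boto3  # bundled with the AWS Lambda Python runtime

        runtime_client = boto3.client("sagemaker-runtime")

    image_bytes = base64.b64decode(event["image_b64"])  # assumed event field
    prediction = classify_image(image_bytes, runtime_client)
    return {"statusCode": 200, "body": json.dumps(prediction)}
```

Passing the client in as an optional argument keeps the handler easy to exercise locally with a stub, without touching AWS.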

Demo:

Improving Student Course Feedback through EEG Brain Signal Models

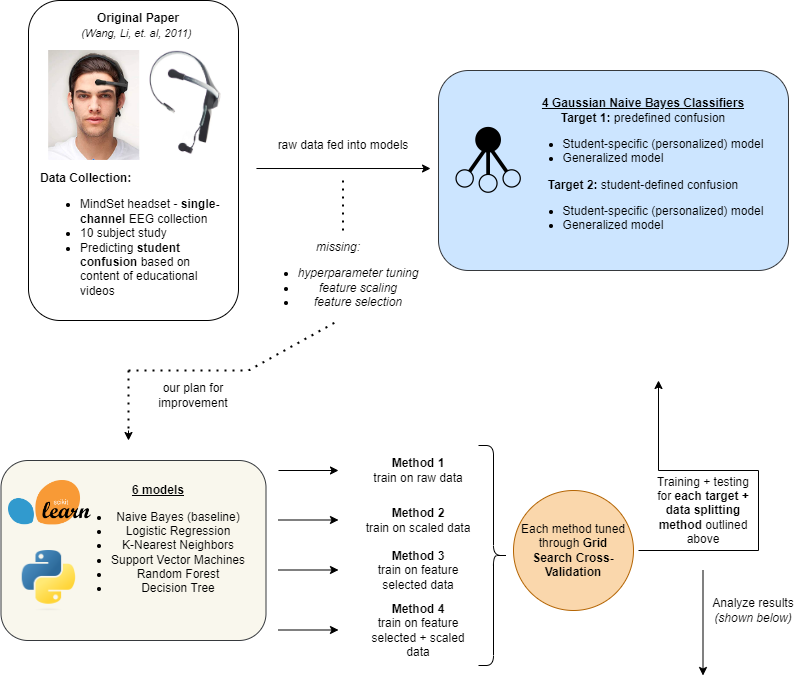

Our research focused on addressing the challenge of obtaining feedback on lesson clarity in online learning platforms. We built upon previous work by graduate student researchers at Carnegie Mellon University who designed a classifier using an EEG headset recorder device to detect student confusion.

Our objective was to reproduce and enhance their results by implementing a more rigorous model selection process, feature selection, scaling, and hyperparameter optimization using grid search cross-validation. Through these methods, we increased model performance by 27% and included an analysis to ensure our results were generalizable.
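For readers unfamiliar with the approach, here is a minimal scikit-learn sketch of scaling, feature selection, and grid search cross-validation combined in one pipeline. It runs on synthetic stand-in data rather than the EEG recordings, and the logistic regression model and parameter grid are assumptions chosen for illustration, not the models or settings used in the project.

```python
from sklearn.datasets import make_classification
from sklearn.feature_selection import SelectKBest, f_classif
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import GridSearchCV, train_test_split
from sklearn.pipeline import Pipeline
from sklearn.preprocessing import StandardScaler

# Synthetic stand-in data; the real project used EEG recordings.
X, y = make_classification(
    n_samples=300, n_features=12, n_informative=5, random_state=0
)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.25, random_state=0, stratify=y
)

# Scaling and feature selection live inside the pipeline so they are
# re-fit on each training fold and never see the validation fold.
pipeline = Pipeline([
    ("scale", StandardScaler()),
    ("select", SelectKBest(score_func=f_classif)),
    ("model", LogisticRegression(max_iter=1000)),
])

# Hypothetical grid: number of features kept and regularization strength.
param_grid = {
    "select__k": [4, 8, 12],
    "model__C": [0.1, 1.0, 10.0],
}

search = GridSearchCV(pipeline, param_grid, cv=5, scoring="accuracy")
search.fit(X_train, y_train)

print("best params:", search.best_params_)
print("cross-validated accuracy:", round(search.best_score_, 3))
print("held-out accuracy:", round(search.score(X_test, y_test), 3))
```

Scoring the best model on a held-out split, as in the last line, is one simple way to check that the cross-validated result generalizes.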

Link to the project

This project does not have a demo, but the slides and report can be found here.

Summarize CLI - a Hugging Face and AWS SageMaker tool

A command line tool that takes a Hugging Face summarizer model (pegasus-xsum by default), deploys it to AWS SageMaker, and queries it for inference. I built this tool with Python, Bash, and a cool program named Bashly that helped me develop the CLI arguments and flags. In the future, I'd like to add support for custom models and for sending multiple queries at once for longer texts.

Demo:

NBA Over/Under Prediction App

Streamlit app that automatically pulls the newest data, predicts on it, and serves betting predictions to the user. It uses GitHub Actions for continuous integration and continuous delivery to AWS EC2. For this project, I used Python and a fairly simple scikit-learn linear regression model (the emphasis was more on the CI/CD than on model tuning).

Demo:

Serverless Machine Learning Model

A linear model used to classify breast cancer instances using UCI's breast cancer diagnostic data set. The model is trained using AWS SageMaker and invoked using Amazon API Gateway and an AWS Lambda function.

Azure Databricks Cluster CLI

CLI built in Rust and Bash that allows you to provision custom clusters in Databricks using the Databricks REST API.
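The CLI itself is written in Rust and Bash, but the kind of request it sends can be sketched in Python. The example below only builds the request for the Clusters API `create` endpoint and does not send it. The workspace URL, token, and cluster settings are placeholders, the helper name `build_create_cluster_request` is hypothetical, and the payload shows only a few of the available fields.

```python
import json
import urllib.request


def build_create_cluster_request(host, token, cluster_name, spark_version,
                                 node_type_id, num_workers):
    """Build (but do not send) a POST request to the Databricks Clusters API."""
    payload = {
        "cluster_name": cluster_name,
        "spark_version": spark_version,
        "node_type_id": node_type_id,
        "num_workers": num_workers,
    }
    return urllib.request.Request(
        url=f"{host.rstrip('/')}/api/2.0/clusters/create",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {token}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


request = build_create_cluster_request(
    host="https://adb-1234567890123456.7.azuredatabricks.net",  # placeholder URL
    token="dapi-EXAMPLE",                                       # placeholder token
    cluster_name="demo-cluster",
    spark_version="13.3.x-scala2.12",  # placeholder runtime version
    node_type_id="Standard_DS3_v2",    # placeholder Azure node type
    num_workers=2,
)
print(request.get_method(), request.full_url)
# Sending it would be: urllib.request.urlopen(request)
```

Separating request construction from sending makes it easy to inspect or log exactly what a CLI flag combination would provision before any cluster is created.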

Demo:

Machine Learning API

FastAPI application that predicts Jayson Tatum's points per game based on continuously updated data from ESPN. Deploys automatically to AWS App Runner.

Demo:

Finding Traumatic Brain Injury in CT Scans

For my image & video processing course at Duke, my teammates and I used multi-Otsu thresholding to segment potential instances of TBI from brain CT scans. This involved a variety of preprocessing steps to ensure consistency as well as researching and testing both machine learning methods and other segmentation methods to find an optimal solution.
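As a small illustration of the core technique, the sketch below applies multi-Otsu thresholding with scikit-image to a synthetic image that has three intensity bands. The image, the choice of three classes, and the idea of treating the brightest class as the region of interest are assumptions made for the example. It does not reproduce the project's preprocessing or use real CT data.

```python
import numpy as np
from skimage.filters import threshold_multiotsu

# Synthetic stand-in for a scan: dark background, mid-intensity region,
# and a small bright patch, plus a little Gaussian noise.
rng = np.random.default_rng(0)
image = np.full((64, 64), 20.0)
image[16:48, 16:48] = 120.0
image[28:36, 28:36] = 220.0
image += rng.normal(0, 5, image.shape)

# Multi-Otsu picks the thresholds that best separate the histogram
# into the requested number of classes (3 classes -> 2 thresholds).
thresholds = threshold_multiotsu(image, classes=3)

# Label each pixel 0, 1, or 2 according to which band it falls into.
regions = np.digitize(image, bins=thresholds)

# Treat the brightest class as the candidate region (an assumption here).
candidate_mask = regions == 2
print("thresholds:", thresholds)
print("candidate pixels:", int(candidate_mask.sum()))
```

On real scans the number of classes and the class of interest would need to be chosen per dataset, which is where consistent preprocessing matters.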

Estimating the Impact of Opioid Control Policies

Along with a small group, I analyzed opioid-related policies across various states to determine whether they had an impact on both the prescription rates and overdose rates of opioids. This project includes a PowerPoint presentation going over our findings in a more succinct way.

During my time with the Clippers, I worked on various machine learning and analytics projects. Two of the most significant are listed below:

- Season Ticket Holder Objections: I analyzed call transcripts from season ticket holders to identify common objections raised by customers. By carefully reviewing these transcripts and using text analytics methods, I was able to uncover recurring themes and patterns. This allowed me to provide valuable insights to the company and make recommendations for improving customer satisfaction and retention.

- Attendance Predictions & Key Variables: In this project, I deployed machine learning models to analyze key variables that impact attendance at Clippers games. By utilizing data from various sources and employing advanced machine learning techniques, I was able to uncover important insights and patterns in attendance behavior. This information helped the company make data-driven decisions on how to improve attendance rates and optimize their operations.

Additionally, I worked on various ad-hoc requests and foundational analytics projects. The main analytics project during my first summer with the Clippers focused on developing a new ticketing model for the Intuit Dome, the Clippers' new stadium opening in Inglewood for the 2024-25 NBA season. My team and I proposed a subscription-based ticketing model that would aim to improve the fan experience and reach a wider target market than we had previously.

I'm proud to say that the subscription-based model will be implemented for the Clippers' first season in the Intuit Dome! By targeting a specific demographic and opening up this opportunity for fans to attend games, we hope to see an increase in Clipper spirit as well as a $1-2 million increase during the first year of implementation.

Rust Miniprojects

Rust is an emerging language that is extremely fast and makes it easy to write safe, performant code. One of my goals when finishing up my MS program was to build a variety of Rust miniprojects to give myself a solid foundation in the language.

As a machine learning enthusiast, I've been very interested in the speed that Rust is able to offer in the context of ML training. One of my focuses in these miniprojects was to build out mini machine learning solutions for a variety of applications. Here are a handful of them:

- A program that generates text based on user input

- A containerized AutoML program that gives info on the best potential models for your data

- A question answering program using BERT

- Hugging Face Model Downloader to ONNX

One of my other goals was to build out mini cloud-based solutions. A good example is my Rust - AWS Lambda Handshake Program, which is useful because cold starts are much faster in Rust than in many other languages and thus incur less cost over time.

Some other examples of Rust cloud solutions I've made are:

While my main language is Python, I've found Rust has taken over as my go-to language when I need something to run very fast, replacing my previous favorite, C++. It's much more fun to write code in Rust, and the compiler is so strict that it's actually quite difficult to write code that doesn't work. I believe Rust will gain a lot of traction in the coming years in the field of DS/ML.